
Here is the 22U HP server rack I picked up. It is on casters, so it will be easy to move should there ever be a need. I am not in a HUGE rush, but a future project will be to cover the open bottom, likely with a thin sheet of plywood or something similar, and then to add some type of filter media to keep the equipment inside clean.

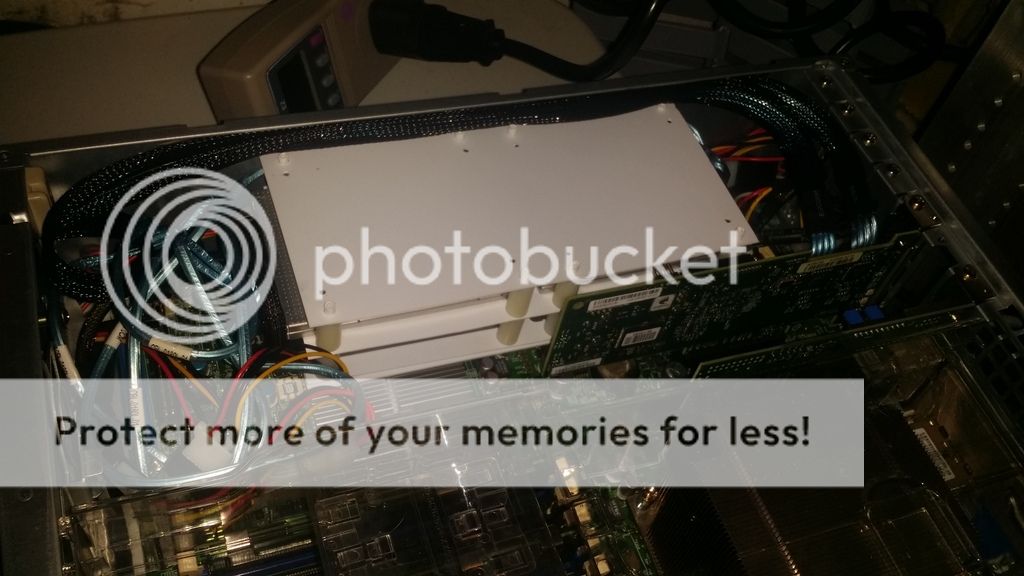

Here is the pretty much completed hard drive caddy. It has the two 60 GB OCZ Agility drives on the bottom. One will hold the OS, and I am thinking I will keep the other as a copy of the OS drive, NOT IN RAID 1, just a copy that I can switch to should anything happen to the main drive. The middle shelf holds two Toshiba Q Series HDTS225XZSTA 256 GB drives. Each will have an 8 GB partition, and the two partitions will be mirrored for the ZIL. The remainder of one drive will be used for L2ARC. I am not quite sure what to do with the remaining ~240 GB partition on the other drive, but I am sure I will figure out something.

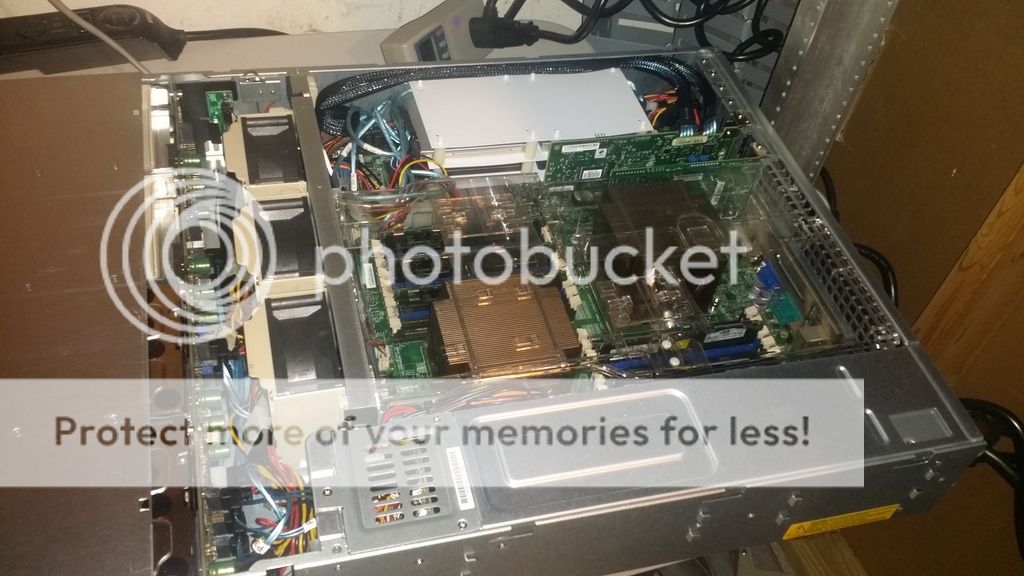
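To make the ZIL and L2ARC plan a bit more concrete, here is a rough sketch of what adding those partitions to a pool might look like. It only prints the `zpool` commands rather than running them. The pool name `tank` and the `/dev/disk/by-id/...` device names are placeholders I made up for illustration, since no pool exists on this box yet.

```shell
#!/bin/sh
# Print (do not run) the zpool commands for a mirrored log device
# and a single cache device. Nothing here touches any disk.
# "tank" and the by-id names in the example call are hypothetical.
plan_slog_l2arc() {
    pool=$1     # name of an existing ZFS pool
    log_a=$2    # small partition on the first SSD
    log_b=$3    # small partition on the second SSD
    cache=$4    # large partition used for L2ARC
    echo "zpool add $pool log mirror $log_a $log_b"
    echo "zpool add $pool cache $cache"
}

plan_slog_l2arc tank \
    /dev/disk/by-id/ssd-a-part1 /dev/disk/by-id/ssd-b-part1 \
    /dev/disk/by-id/ssd-a-part2
```

Printing the commands first seems like the safer habit while I am still learning, since I can read them over before pasting anything into a root shell.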

Here is the fully trimmed hard drive caddy inside of the server. There is PLENTY of room for it inside of the server. It will be a bit tight with drives on the top shelf, but it will be manageable.

NOTE - The RAID card still makes me nervous because of the bend radius of the cable. I may end up finding a new home for this one and picking up one that has the SFF connectors on the end of the card facing INTO the chassis rather than facing up, but I have to look into this further. I honestly haven't done anything with any of the hot swap drives to see whether the cable bend, as pictured, has any effect on the connectivity of the drives.

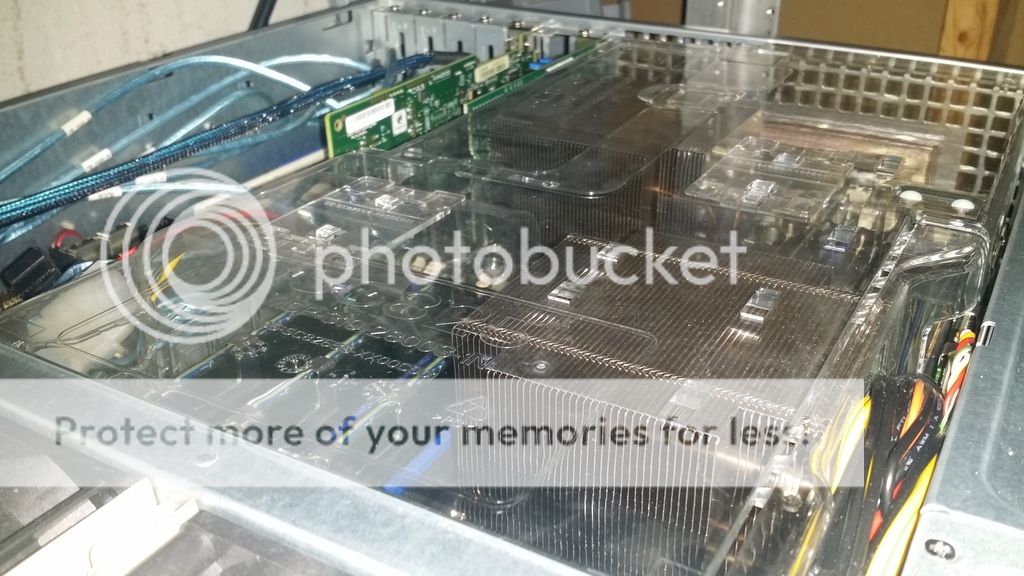

Here are the CPU heat sinks. They don't have fans on them, but they are just about 2U tall. They go from the CPU to just under the plastic cover that keeps airflow within the CPU lane. There are 3x 80 mm PWM fans, which you can see in the lower left portion of the frame.

Here is the memory that the server came with. I will be looking into increasing the amount of memory, as the usual ZFS rule of thumb is 1 GB of RAM for each TB of storage space. I am not sure if that is for TOTAL capacity or USED capacity, but I figure it would be best to just max out what I can in the beginning so I don't have to take the server offline later to add more. Plus I will need some RAM for the OS and any virtual machines running. For now, I am thinking of doubling to 48 GB, for under $180 shipped. Not quite ready to pull the trigger on the memory yet, but I have the funds.
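As a quick sanity check on that rule of thumb, here is a small sketch that adds up the RAM estimate. The numbers in the example call (24 TB of storage, 4 GB for the OS, 16 GB for virtual machines) are assumptions I picked just to show the math, not the final figures for this build.

```shell
#!/bin/sh
# Rule-of-thumb RAM estimate: 1 GB per TB of pool storage,
# plus whatever is set aside for the OS and virtual machines.
# All inputs are whole numbers; the example values are placeholders.
estimate_ram_gb() {
    storage_tb=$1   # pool size in TB
    os_gb=$2        # RAM reserved for the OS, in GB
    vm_gb=$3        # RAM reserved for virtual machines, in GB
    echo $(( storage_tb + os_gb + vm_gb ))
}

# Example: 24 TB pool, 4 GB for the OS, 16 GB for VMs
estimate_ram_gb 24 4 16
```

If the estimate comes out close to or above what is installed, that is a decent argument for buying the extra memory sooner rather than later.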

Memory:

Nanya - NT4GC72B4NA1NL-CG

Speed - DDR3-1333 PC3-10600 667MHz (1.5ns @ CL = 9)

Organization - 512Mx72

Power - 1.5V

Contacts - Gold

I have Ubuntu Server 14.04 installed at this point, but not much else. I have only run a few commands to update the server, and I still don't know a whole lot about what I am doing. I have LOTS of reading left to do.

apt-get update

apt-get upgrade

I can't recall the other few commands I have run so far, but I also installed and updated ebox. My understanding is that it allows for web-based administration of the Ubuntu server, but perhaps I am wrong. For the life of me I can't figure out how to get it running, but again, more reading to do.