soarwitheagles

That is quite nifty! I knew there was a way to run one or the other; I was just mistaken in thinking you had to manually switch between integrated and discrete.

I am curious what the power draw difference is going to be, though. It might be worth testing, for techies everywhere, if you have a way to measure your system's power draw.

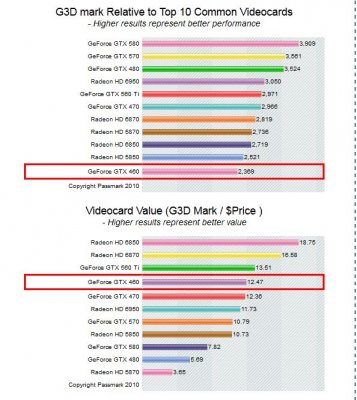

It puts your discrete GPU into idle mode, which should mean it's still drawing roughly 160-170 W (source | source).

So the question becomes: with your GPU always drawing idle power, is your integrated graphics really more power efficient at low-end tasks?

I'm guessing the answer is probably "yes", otherwise they wouldn't have added it to the motherboard, unless it's just a total gimmick (yeah, I'm a little cynical).

Yes, it sure sounds nice. I do have a couple of those Kill A Watt Electricity Usage Monitors, so I will test and post the wattage results comparing integrated and discrete after I build this new rig. On the other hand, hopefully I can find the answer before I build it, so that if it is a gimmick, I can save myself the heartache and just build a normal machine.

Either way, I fully intend to discover if this really is an energy saving bell and whistle.

Thanks for those URLs, but I am not so sure they are accurate. I question the wattage ratings because I have already tested both rigs using the Kill A Watt Electricity Usage Monitor, and nothing is over 150 watts when the machine is idle. Example: I just plugged in my 2500K rig, and the entire machine is pulling only 79 W at idle and a massive 92 W when playing the I, Robot digital movie. I'm not sure how my video card alone can be pulling 162 W at idle when the whole system draws less than that. It doesn't make sense to me...

EDIT: Maybe I just discovered the true wattage draw at idle on the 6870...

I see another area that says this:

Average IDLE wattage for 6870 ~ 19W

19 watts is not bad at all! I think this could save me money over the long term...
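For anyone who wants to put rough numbers on the "save me money" part, here is a minimal Python sketch of the arithmetic. The 19 W and 162 W idle figures are the ones quoted in this thread; the 8 idle hours per day and the $0.15 per kWh rate are placeholder assumptions on my part, so plug in your own usage and your own utility rate.

```python
def annual_cost(watts, hours_per_day, rate_per_kwh):
    """Yearly electricity cost of a constant load, in the currency of rate_per_kwh."""
    kwh_per_year = watts / 1000 * hours_per_day * 365
    return kwh_per_year * rate_per_kwh


# Assumed values (not from the thread): adjust to your own situation.
IDLE_HOURS_PER_DAY = 8
RATE_PER_KWH = 0.15

# Idle GPU draw figures quoted in the thread.
for label, watts in [("6870 idle at ~19 W", 19), ("claimed idle at 162 W", 162)]:
    cost = annual_cost(watts, IDLE_HOURS_PER_DAY, RATE_PER_KWH)
    print(f"{label}: about ${cost:.2f} per year")
```

The same function works for Kill A Watt readings of the whole system: measure idle draw on integrated and on discrete, and pass the difference in watts to see what the switch is actually worth per year.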

Soar